Yesterday’s entry featured a short cross-validation run using a fixed network structure. That structure still needs to be verified and, where possible, improved.

The network structure had n neurons in the input layer (one for each of the n attributes), two neurons in the hidden layer and one in the output layer (n-2-1). Since there is no reliable way to determine or calculate the right number of neurons in the hidden layer, one can run tests with different numbers and compare the resulting error. This modified script does exactly that. The number of neurons in the hidden layer is varied from 1 to m, cross-validation is carried out for each of the resulting networks, and the mean and standard deviation of the error are recorded.
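The sweep described above can be sketched roughly as follows. This is not the original script, only a minimal outline of the loop structure. The names `leave_one_out_errors`, `sweep_hidden_sizes` and `train_and_predict` are hypothetical, the absolute error is an assumed error measure, and `mean_predictor` is a placeholder standing in for the actual network training.

```python
from statistics import mean, stdev


def leave_one_out_errors(X, y, train_and_predict, n_hidden):
    """Return the absolute prediction error for each sample when it is held out.

    X is a list of attribute lists, y a list of target values.
    """
    errors = []
    for i in range(len(X)):
        # Train on everything except sample i, then predict sample i.
        X_train = X[:i] + X[i + 1:]
        y_train = y[:i] + y[i + 1:]
        prediction = train_and_predict(n_hidden, X_train, y_train, X[i])
        errors.append(abs(prediction - y[i]))
    return errors


def sweep_hidden_sizes(X, y, train_and_predict, m):
    """Map each hidden-layer size 1..m to (mean error, stddev of error)."""
    results = {}
    for n_hidden in range(1, m + 1):
        errors = leave_one_out_errors(X, y, train_and_predict, n_hidden)
        # stdev needs at least two samples.
        results[n_hidden] = (mean(errors), stdev(errors))
    return results


def mean_predictor(n_hidden, X_train, y_train, x_test):
    """Placeholder model (assumption): ignores n_hidden and the inputs.

    Replace this with code that trains an n-n_hidden-1 network on the
    training data and returns its prediction for x_test.
    """
    return mean(y_train)


if __name__ == "__main__":
    X = [[0.0], [1.0], [2.0], [3.0]]
    y = [0.0, 1.0, 2.0, 3.0]
    for size, (err_mean, err_std) in sweep_hidden_sizes(X, y, mean_predictor, 3).items():
        print(size, err_mean, err_std)
```

Passing the training routine in as a function keeps the sweep independent of whichever network library is actually used.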

mean/stddev over number of neurons:

It turns out that both the mean and the standard deviation of the error appear to reach a minimum with eight neurons in the hidden layer.

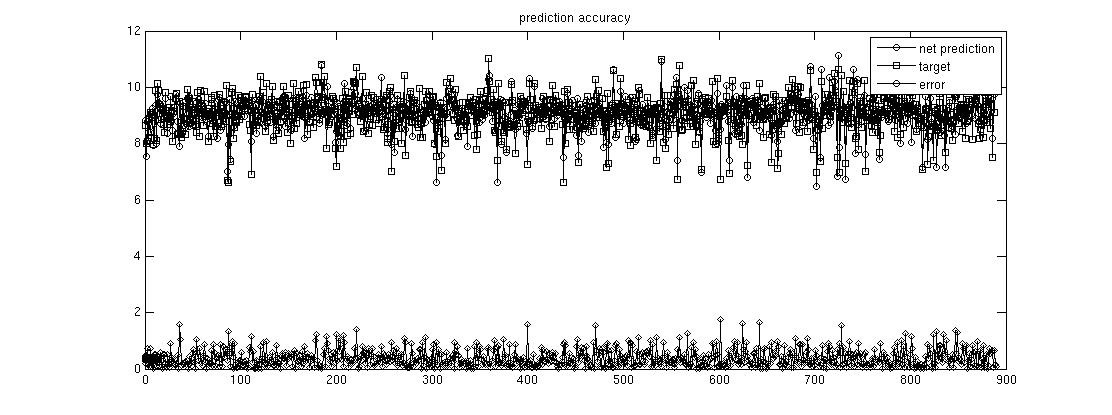

Running the earlier script with this setting (n-8-1 neurons) yields the following plot. It still shows the error during cross validation at the bottom:

The next step might be to perform cross validation in larger blocks instead of using leave-one-out. The prediction accuracy should be roughly the same, albeit at reduced computational cost.
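A block-wise split could look roughly like the sketch below. The helper `block_folds` is a hypothetical name, not part of the earlier script. It assumes the samples are in no particular order (or have been shuffled beforehand), since each test block consists of consecutive indices.

```python
def block_folds(n_samples, block_size):
    """Yield (train_indices, test_indices) pairs for consecutive blocks.

    The last block may be smaller if block_size does not divide n_samples.
    """
    for start in range(0, n_samples, block_size):
        stop = min(start + block_size, n_samples)
        test = list(range(start, stop))
        # Everything outside the current block is used for training.
        train = [i for i in range(n_samples) if i < start or i >= stop]
        yield train, test


if __name__ == "__main__":
    # 10 samples in blocks of 3: four networks to train instead of ten.
    for train, test in block_folds(10, 3):
        print("train:", train, "test:", test)
```

With a block size of 1 this reduces to leave-one-out. With n samples and block size b, only ceil(n/b) networks need to be trained instead of n, which is where the saving in computation comes from.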