As I mentioned earlier, I’m still busy writing a paper on the agriculture data. Essentially, I’ve taken the wheat yield data, written a thorough description of it for the paper, and done some mining. This consisted of optimizing a neural network trained on the data, or at least finding the network topology that best suits the data. The work was really interesting, given all the MATLAB scripting and the evaluation of the network’s output. I’m lucky to have been provided with data on such an interesting problem.

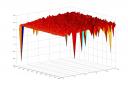

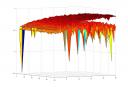

The main outcome is that the wheat yield data (see details) can be modeled sufficiently well using neural networks with two hidden layers of 16 neurons each. The experiments also showed that the first hidden layer should be at least as large as the second. As expected, the more data become available at the successive fertilization times, the better the network’s approximation and hence its prediction. The MSE (mean squared error) dropped from 0.3 to 0.2 t/ha, with a mean yield of around 9 t/ha.
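To make the idea of a topology search a bit more concrete, here is a minimal sketch. It is written in Python rather than MATLAB and is not the code used for the paper. It enumerates two-hidden-layer topologies in which the first layer is at least as large as the second and picks the one with the lowest validation error. The names `candidate_topologies`, `select_topology` and `toy_validation_error` are made up for illustration, and the toy error function is an arbitrary stand-in for "train the network and measure its error", not a real result.

```python
from itertools import product
from typing import Callable, Iterable, List, Tuple

Topology = Tuple[int, int]  # (size of first hidden layer, size of second)


def candidate_topologies(sizes: Iterable[int]) -> List[Topology]:
    """Return all (h1, h2) pairs with h1 >= h2 drawn from the given sizes."""
    unique_sizes = sorted(set(sizes))
    return [(h1, h2) for h1, h2 in product(unique_sizes, repeat=2) if h1 >= h2]


def select_topology(
    sizes: Iterable[int],
    evaluate: Callable[[int, int], float],
) -> Tuple[Topology, float]:
    """Evaluate every candidate and return the one with the lowest error."""
    scored = [((h1, h2), evaluate(h1, h2)) for h1, h2 in candidate_topologies(sizes)]
    return min(scored, key=lambda item: item[1])


def toy_validation_error(h1: int, h2: int) -> float:
    """Hypothetical placeholder for training a network and returning its
    validation error. The formula is arbitrary and only makes the example run."""
    return float(abs(h1 - 8) + abs(h2 - 4))


if __name__ == "__main__":
    best, error = select_topology([2, 4, 8, 16], toy_validation_error)
    print("best topology:", best, "error:", error)
```

In a real experiment, `evaluate` would train a network of the given size several times and return, say, the average error on held-out data, since a single run depends on the random weight initialization.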

The preliminary abstract of the paper “Wheat yield prediction using Neural Networks”:

Precision agriculture (PA) and information technology (IT) are closely interwoven. The former usually refers to the application of modern technology to agriculture. Due to the use of sensors and GPS technology, large amounts of data are collected in today’s agriculture. Making use of those data via IT often leads to dramatic improvements in efficiency. This paper deals with suitable modeling techniques for those agricultural data. As a consequence, yield prediction is enabled based on cheaply available site data. Based on this prediction, economic or environmental optimization of, e.g., fertilization can be carried out.

The corresponding MATLAB R2007b script can be downloaded here: agricult_10.m.