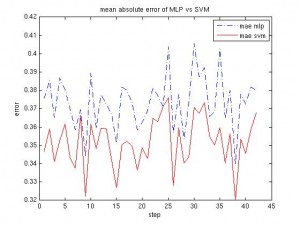

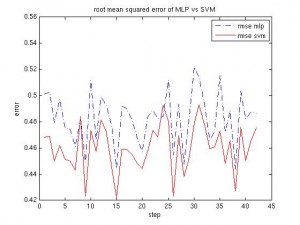

In the previous article I concluded that the SVM performs slightly worse than the MLP neural network, each in a more or less optimal configuration. That turned out to be only a preliminary result: after I added normalization to the script, the outcome reversed. As the graphs show, the SVM is now consistently better than the MLP. I’ll have to check this result on other data sets, though.
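To make the normalization step concrete, here is a minimal sketch in plain Python. It assumes z-score standardization (zero mean, unit variance per feature), which may not be the scheme the script actually uses; min-max scaling would be an equally plausible choice. The function names `fit_standardizer` and `standardize` and the toy data are hypothetical. The statistics are computed on the training partition only and then reused for the test partition, so no information from the test data leaks into training.

```python
import statistics

def fit_standardizer(rows):
    """Compute per-feature mean and standard deviation from the training rows."""
    columns = list(zip(*rows))
    means = [statistics.fmean(col) for col in columns]
    # Population standard deviation; a constant feature gets 1.0 to avoid dividing by zero.
    stds = [statistics.pstdev(col) or 1.0 for col in columns]
    return means, stds

def standardize(rows, means, stds):
    """Rescale each feature with the given (training) means and deviations."""
    return [[(x - m) / s for x, m, s in zip(row, means, stds)] for row in rows]

# Toy data (made up): the second feature has a much larger range than the first.
train = [[1.0, 200.0], [2.0, 400.0], [3.0, 600.0]]
test = [[2.0, 500.0]]

means, stds = fit_standardizer(train)
train_scaled = standardize(train, means, stds)
test_scaled = standardize(test, means, stds)  # reuses the training statistics
```

One likely reason normalization matters more for the SVM: with a distance-based kernel such as RBF, a feature with a large numeric range dominates the distance computation unless all features are brought to a comparable scale.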

(Each step on the x-axis is just one random partitioning of the data, which the rest of that trial was run with.)
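A rough sketch of what one random partitioning per step could look like, in plain Python. The helper `random_partition`, the 30% test fraction, the sample count, and the use of the step number as the seed are all assumptions made for illustration, not details taken from the actual script.

```python
import random

def random_partition(n_samples, test_fraction, seed):
    """Shuffle the sample indices and split them into train and test index lists."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # seeded, so each step is reproducible
    n_test = int(n_samples * test_fraction)
    return indices[n_test:], indices[:n_test]

# One partition per step; both classifiers would be trained and scored on the same split.
partitions = [random_partition(10, 0.3, seed=step) for step in range(3)]
for step, (train_idx, test_idx) in enumerate(partitions):
    print(step, sorted(train_idx), sorted(test_idx))
```

Running both models on the same partition within a step keeps the comparison fair: any difference between the two curves at a given step then comes from the models, not from one of them drawing an easier split.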